Quality assurance (QA) is a core tenet of any deep packet inspection (DPI) solution. After all, DPI technology is often tasked with monitoring critical gateways that carry traffic across network borders. Any shortfall in DPI’s ability to identify the packets flowing through the network can have a disastrous impact on the network and erode DPI’s effectiveness in monitoring and safeguarding network assets.

At ipoque, we take pride in our high QA standards. Our ethos puts quality assurance at the center of all our efforts to create the next best version of our DPI software. Both R&S®PACE 2 and its VPP-based counterpart, R&S®vPACE, are testament to this, with a string of technological breakthroughs ensuring our software delivers unparalleled accuracy and reliability.

The race against time

Why is QA so important for DPI technology? Unlike more static technologies, DPI software must respond quickly to the constant change in today’s networking environment, or its quality degrades. As networks evolve to include different IP connectivity stacks, as devices proliferate and as new architectures, such as cloud-native ones, become more prevalent, visibility into traffic flows becomes ever more crucial. New applications are introduced every day, leaving network administrators without insight into the packets traversing their networks. New forms of cyberattacks are unleashed every week, resulting in many unknown threats lurking in the network.

Most networking technologies take weeks, if not months, to respond to these changes. DPI software, however, does not have that luxury. Even the slightest change in traffic signatures must be reflected quickly so that networks remain traffic aware. At ipoque, we ensure the fastest turnaround times, with signature updates applied every week. We achieve this with dedicated expert teams who continuously gather and analyze global traffic flows to identify the latest changes in signatures, however small. These findings are thoroughly investigated, implemented, tested and eventually deployed in customer networks. This weekly cycle is repeated continuously to create the most comprehensive and up-to-date signature repository, ensuring the highest level of classification accuracy with virtually zero false positives and false negatives.

The rigor

Underpinning each cycle of signature updates is ipoque’s comprehensive QA methodology, which comprises two phases. The first phase leverages our capacity to generate real network traffic in a controlled environment, a process carried out in our test labs by our highly experienced experts. We currently create up to 1,000 traces each week and analyze these traffic captures to determine the latest classification updates. We emulate different networks, for example radio access networks, to re-create actual traffic scenarios that help us understand how network characteristics influence application behavior.

As part of our signature update exercise, we track thousands of protocols, applications and service types, focusing on what is most relevant for our customers. Mobile traffic in particular receives closer scrutiny due to the relentless stream of new applications and version updates. We address this with our Mobile Automation Framework (MAF), which automatically generates traffic for a selected set of 150 high-priority applications, whose daily updates trigger test runs 24/7. Our test team has produced more than 500,000 test traces since 2012, half of them in the last four years. We also take into account the special attributes of regional traffic, with MAF nodes distributed across key regions, such as America, Europe, Africa and Asia. These nodes rely on secure networking infrastructure from Rohde & Schwarz to create region-specific data and transfer it back to our central trace management system in Europe. Given our advancements in test automation, we also expect a strong need for clean, controlled but realistic machine learning (ML) training data in the coming years, especially as more traffic becomes encrypted or obfuscated.
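To illustrate the idea of application updates triggering test runs, here is a minimal sketch. It is not the MAF implementation; the function name, the application identifiers and the version strings are all hypothetical, and it only assumes that some process records which version of each application was last tested.

```python
from typing import Dict, List


def apps_needing_test_run(
    last_tested: Dict[str, str],
    latest: Dict[str, str],
) -> List[str]:
    """Return the apps whose latest published version differs from the
    version that was last tested (new apps count as untested)."""
    return sorted(
        app for app, version in latest.items()
        if last_tested.get(app) != version
    )


# Hypothetical state: versions covered by the previous test runs ...
last_tested = {"app_a": "1.0", "app_b": "2.3"}
# ... and the versions currently published.
latest = {"app_a": "1.1", "app_b": "2.3", "app_c": "0.9"}

pending = apps_needing_test_run(last_tested, latest)
print(pending)
```

In this sketch, an updated application and a newly added one are both queued, while an unchanged application is skipped.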

Prevention better than cure

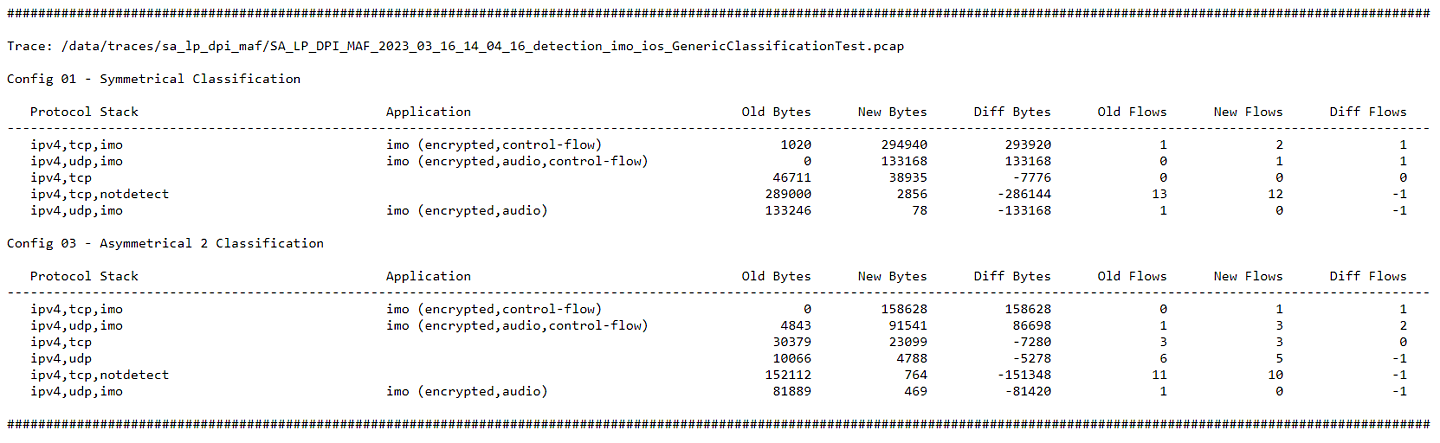

The second phase in our QA process focuses on ensuring a weekly, fully updated classification portfolio. To meet this standard, we have implemented built-in QA based on prevention rather than cure, where the incidence of bugs and code errors is systematically minimized. As part of this commitment, we have integrated automated regression testing into our continuous integration/deployment (CI/CD) pipelines. This enables 100% classification test coverage for each code merge. As a result, we are able to process 3.5 terabytes of test material up to 100 times per week, with millions of test results analyzed automatically (see image 1 for an example). Our built-in QA machines use distinct sets of test material, curated to reflect application behavior in different environments. Because the results are analyzed automatically, every DPI engineer can concentrate on the anomalies that are detected.
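The regression testing described above can be pictured with a small sketch. This is an assumption-laden illustration, not ipoque's pipeline: `LabeledTrace`, `find_regressions` and `demo_classify` are hypothetical names, and the stand-in classifier returns canned results where a real setup would replay a capture through the DPI engine.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class LabeledTrace:
    """A stored trace plus the application it is known to contain (hypothetical)."""
    trace_id: str
    expected_app: str


def find_regressions(
    traces: List[LabeledTrace],
    classify: Callable[[str], str],
) -> Dict[str, Dict[str, str]]:
    """Classify every trace and collect those whose result differs from the label."""
    regressions: Dict[str, Dict[str, str]] = {}
    for trace in traces:
        actual = classify(trace.trace_id)
        if actual != trace.expected_app:
            regressions[trace.trace_id] = {
                "expected": trace.expected_app,
                "actual": actual,
            }
    return regressions


def demo_classify(trace_id: str) -> str:
    """Stand-in for a DPI engine call; returns canned results for the demo."""
    canned = {"t1": "video_app", "t2": "chat_app", "t3": "unknown"}
    return canned.get(trace_id, "unknown")


corpus = [
    LabeledTrace("t1", "video_app"),
    LabeledTrace("t2", "chat_app"),
    LabeledTrace("t3", "voip_app"),
]

failures = find_regressions(corpus, demo_classify)
for trace_id, detail in failures.items():
    print(f"{trace_id}: expected {detail['expected']}, got {detail['actual']}")
```

A CI job built along these lines would fail the merge whenever `failures` is non-empty, so that engineers only need to look at the traces that changed classification.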